Ecommerce Competitive Intelligence Platform - Amazon Case Study

A comprehensive competitive intelligence platform for Amazon sellers to track competitor pricing, inventory, and marketing strategies.

Project Overview

The project delivers a production-ready sentiment analysis system that converts raw customer reviews into strategic business intelligence. The system analyzes reviews of multiple e-commerce apps on Google Play (Amazon, eBay, Etsy, Temu and Shein) to provide competitive insights and customer satisfaction metrics that directly support decision-making in marketing strategy, product development and service operations.

The system is easily configurable for any app type on Google Play and can monitor more than five apps at any given time. Additionally, with major modifications, the system can be adapted to any type of review.

Intended Audience

This project is intended for the following audiences:

- Campaign Managers → need competitive insights for ad targeting and messaging strategy

- Marketing Managers → require customer satisfaction metrics for brand positioning

- Growth Hackers → seek data-driven opportunities for improving customer retention and boosting loyalty

- Technical Marketers → need automated, systematic, in-depth analytics to replace manual research processes

- Product Managers → need customer feedback analysis to prioritize feature and roadmap decisions

- Operations Professionals → require performance monitoring and customer service feedback insights

Pain Points

Manual Analysis Burden:

- 8+ hours weekly manually analyzing competitor reviews with inconsistent quality

- Expensive third-party sentiment tools ($500-2000/month) with limited platform coverage

- Delayed insights affecting campaign timing and competitive response

Strategic Intelligence Gaps:

- Missing competitor weaknesses and platform-specific customer behaviors

- Reactive rather than proactive competitive and product strategy

- No systematic approach to feature prioritization or customer service optimization

Operational Inefficiencies:

- Slow turnaround from customer insight to actionable decisions

- Limited visibility into user experience issues and satisfaction drivers

- Difficulty measuring impact of improvements on customer perception

Objectives

Primary Goals

- Competitive Intelligence: Real-time sentiment tracking across 5 major e-commerce platforms

- Customer Experience Analysis: SERVQUAL-based business metrics (reliability, trust, usability)

- Marketing Insights: Platform-specific customer pain points and satisfaction drivers

- Automated Reporting: Replace manual review analysis with AI-powered insights

Business Impact

- Identify competitor weaknesses and market opportunities

- Track customer satisfaction trends by platform and rating

- Generate actionable recommendations for product/marketing teams

- Reduce manual analysis time from hours to minutes

The Approach & Process

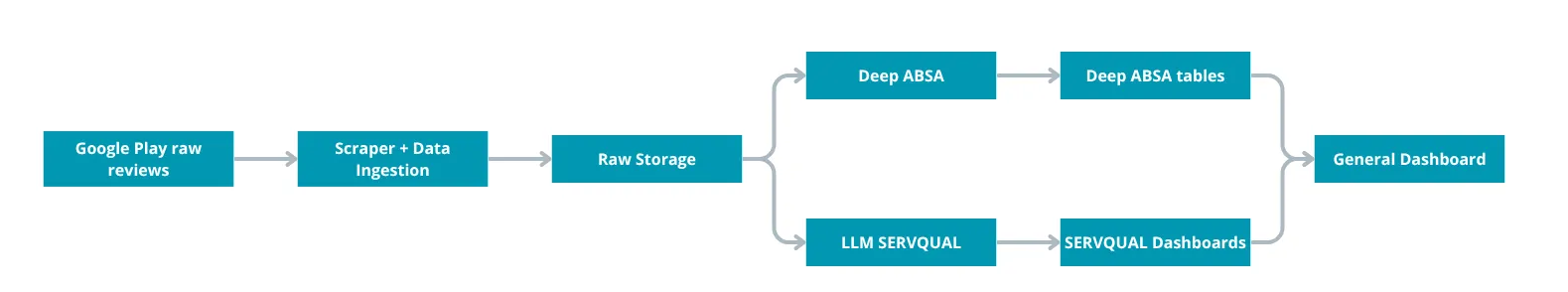

The system employs a dual-engine architecture that separates technical sentiment analysis from business intelligence generation, ensuring both granular insights and strategic overview for marketing teams.

Project Management

The development of the system took approximately 3-4 weeks of full-time work. It consisted of 5 main phases, conducted sequentially.

- Phase 1 - Foundations and Infrastructure

  - Goal - Main infrastructure and the basic data flow

  - Deliverables - Scraper → Data Ingestion → Database → Basic dashboard version

- Phase 2 - Core ABSA engine

  - Goal - ABSA processing model

  - Deliverables - Working ABSA analytics with batch processing

- Phase 3 - SERVQUAL LLM Integration

  - Goal - Test various open-source models and implement the most appropriate one for LLM-based SERVQUAL dimension classification via Ollama

  - Deliverables - Production-ready LLM SERVQUAL analyzer

- Phase 4 - Advanced Dashboards & Integration

  - Goal - Fully integrate the LLM and create enhanced visualizations

  - Deliverables - Production-ready pipeline with LLM SERVQUAL insights dashboards

- Phase 5 - Testing and GitHub public publishing

  - Goal - Test all the features, make adjustments where needed, prepare documentation and make the repository public

  - Deliverables - Portfolio-ready project

Notion was used to track progress and modifications and to organize the allocated time and resources.

Data Collection & Preparation

Source

- Google Play Store reviews via automated scraping with the Google Play Reviews Scraper package, which uses the platform’s API

- Quality filtering for meaningful content (30-500 characters)

- Platform detection across Amazon, eBay, Etsy, Temu, Shein apps

- Daily batch processing with configurable interval
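The length-based quality filter listed above can be sketched as follows. This is a minimal illustration rather than the project's actual code: the function name `is_meaningful` and the whitespace normalization are assumptions, and only the 30-500 character bounds come from the text.

```python
MIN_CHARS = 30   # lower bound stated in the text
MAX_CHARS = 500  # upper bound stated in the text


def is_meaningful(text: str, min_chars: int = MIN_CHARS, max_chars: int = MAX_CHARS) -> bool:
    """Return True when the review length falls inside the accepted range."""
    # Collapse runs of whitespace so padding does not inflate the length (assumption).
    normalized = " ".join(text.split())
    return min_chars <= len(normalized) <= max_chars


reviews = [
    "Great app",  # too short
    "Checkout keeps crashing every time I try to pay with my saved card.",
    "x" * 600,  # too long
]
kept = [review for review in reviews if is_meaningful(review)]
```

Keeping the bounds as parameters matches the configurable nature of the pipeline: a different app category could use different limits without code changes.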

Data quality

- Automatic spam and low-quality review filtering

- Multi-language support with English focus

- Rating correlation validation (1-5 star scale)

- Historical data preservation for trend analysis

GDPR Compliance

The system automatically discards any identifying or private details of the user accounts that published the reviews, such as user names and user IDs, either by deleting them or by skipping their collection entirely. As such, no private or identifying information is stored or used by the system.
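One way to implement this kind of data minimization is a whitelist applied before anything is stored. The sketch below is hypothetical: the key names (`reviewId`, `userName`, `userImage`, `content`, `score`, `at`) are assumed to resemble what a Google Play scraper returns, and `strip_personal_data` is an illustrative helper, not a function from the project.

```python
from typing import Any, Dict

# Fields kept for analysis; everything else is dropped before storage.
# These key names are assumptions, not confirmed project fields.
ALLOWED_FIELDS = ("content", "score", "at")


def strip_personal_data(raw_review: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only whitelisted, non-identifying fields from a raw review."""
    return {key: raw_review[key] for key in ALLOWED_FIELDS if key in raw_review}


raw = {
    "reviewId": "abc-123",
    "userName": "Jane Doe",
    "userImage": "https://example.com/avatar.png",
    "content": "Delivery was late but support sorted it out quickly.",
    "score": 3,
    "at": "2024-05-01",
}
clean = strip_personal_data(raw)
```

A whitelist is safer than a blacklist here: if the scraper starts returning a new identifying field, it is dropped by default instead of being stored by accident.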

Data Preprocessing & Storage

Storage Architecture

- PostgreSQL for structured review data and results

- Separate tables for technical analysis vs business intelligence

- Processing flags to track analysis completion status

- Checkpoint system for large batch recovery
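The processing flags and checkpoint recovery described above can be illustrated with a small sketch. It makes several assumptions: `sqlite3` stands in for PostgreSQL so the example runs anywhere, and the table name, the `absa_processed` column and the `process_batch` helper are all hypothetical.

```python
import sqlite3

# sqlite3 stands in for PostgreSQL; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE reviews (
        id INTEGER PRIMARY KEY,
        content TEXT NOT NULL,
        absa_processed INTEGER NOT NULL DEFAULT 0
    )
    """
)
conn.executemany(
    "INSERT INTO reviews (content) VALUES (?)",
    [(f"review {i}",) for i in range(5)],
)
conn.commit()


def process_batch(connection: sqlite3.Connection, batch_size: int) -> int:
    """Process up to batch_size unflagged reviews and flag them as done."""
    rows = connection.execute(
        "SELECT id, content FROM reviews WHERE absa_processed = 0 ORDER BY id LIMIT ?",
        (batch_size,),
    ).fetchall()
    for review_id, _content in rows:
        # The real analysis would run here; the flag is set once it succeeds.
        connection.execute(
            "UPDATE reviews SET absa_processed = 1 WHERE id = ?", (review_id,)
        )
    # Committing per batch acts as the checkpoint: a crash loses at most one batch.
    connection.commit()
    return len(rows)


first = process_batch(conn, batch_size=2)
second = process_batch(conn, batch_size=2)  # resumes where the first call stopped
remaining = conn.execute(
    "SELECT COUNT(*) FROM reviews WHERE absa_processed = 0"
).fetchone()[0]
```

Because each run selects only unflagged rows, restarting after a failure needs no separate bookkeeping: the flags themselves record where processing stopped.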

Data Structure

- Review metadata: platform, rating, publication date, app details

- Processing timestamps: analysis date vs review publication date

- Quality indicators: review length, language, spam probability

- Platform context: app category, competitor identification
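As a rough illustration of how these groups of fields could fit into one record, the sketch below uses a dataclass. Every field name is an assumption chosen to mirror the list above and does not reflect the real database schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ReviewRecord:
    """One stored review; field names are illustrative, not the real schema."""

    # Review metadata
    platform: str
    app_id: str
    rating: int
    published_at: datetime
    # Processing timestamp, kept separate from the publication date
    analyzed_at: Optional[datetime]
    # Quality indicators
    length: int
    language: str
    spam_probability: float
    # Platform context
    app_category: str
    is_competitor: bool

    def __post_init__(self) -> None:
        if not 1 <= self.rating <= 5:
            raise ValueError("rating must be on the 1-5 star scale")


record = ReviewRecord(
    platform="amazon",
    app_id="com.example.shop",  # placeholder identifier
    rating=4,
    published_at=datetime(2024, 5, 1),
    analyzed_at=None,  # not analyzed yet
    length=120,
    language="en",
    spam_probability=0.02,
    app_category="shopping",
    is_competitor=False,
)
```

Keeping `analyzed_at` separate from `published_at` is what allows trends to be plotted by publication date even when reviews are processed in later batches.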

Data Processing

The system uses 2 separate methods to analyze the reviews based on the task:

- ABSA Engine - for technical analysis of reviews

- LLM - for Business Intelligence (BI) purposes to analyze the reviews using the SERVQUAL framework

ABSA Engine

What is ABSA?

ABSA stands for Aspect-Based Sentiment Analysis. To understand what it does, it helps to look first at standard sentiment analysis. Sentiment analysis, sometimes referred to as opinion mining, analyzes a text to determine whether it expresses a positive, neutral or negative sentiment. At scale, it is usually done with NLP models.

ABSA, on the other hand, requires the use of more advanced NLP models which can extract more in-depth information about a text than a normal sentiment analysis model. For instance, let’s assume there is the following review:

The waiting times are horrible, we’ve waited for 50 mins, and prices are high, but the staff was friendly.

A standard sentiment analysis model would likely classify this as a negative review. An ABSA model, however, would assign a sentiment to each aspect:

- Waiting times - negative

- Prices - negative

- Staff - positive

This is, of course, a simplified explanation. A more complete explanation can be found on IBM’s website.
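The difference between the two outputs can be made concrete with a small data-structure sketch. No model is run here: the aspect labels are written by hand from the example review, and the majority vote in `overall_label` is only a stand-in for a document-level classifier, used to show what information is lost.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AspectSentiment:
    aspect: str
    sentiment: str  # "positive", "neutral" or "negative"


# Hand-written output for the example review; no model is involved.
absa_result: List[AspectSentiment] = [
    AspectSentiment("waiting times", "negative"),
    AspectSentiment("prices", "negative"),
    AspectSentiment("staff", "positive"),
]


def overall_label(results: List[AspectSentiment]) -> str:
    """Collapse aspect labels into one document-level label by majority vote."""
    counts = {"positive": 0, "neutral": 0, "negative": 0}
    for item in results:
        counts[item.sentiment] += 1
    return max(counts, key=counts.get)


document_label = overall_label(absa_result)
positives = [item.aspect for item in absa_result if item.sentiment == "positive"]
```

The single document-level label hides the fact that the staff was praised, while the aspect-level list keeps it available for analysis.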

Importance in Marketing

NLP models that are used for ABSA or sentiment analysis can save time and resources for many marketing teams, while providing important information. Their uses include:

- The automatic calculation of Net Promoter Score (NPS)

- Campaign monitoring during special events, such as elections

- Brand monitoring

ABSA’s role in the current system

The ABSA engine uses a RoBERTa NLP model to capture how sentiment differs across the aspects mentioned in a review. In short, it provides the following, based on the opinions expressed in the reviews:

- Aspect extraction: UI, performance, features, support, etc.

- Sentiment classification: positive, neutral, negative percentages

- Confidence scoring for reliability assessment

- Aggregated metrics for trend analysis
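The aggregation step, turning individual aspect predictions into sentiment percentages while using confidence as a reliability filter, could look roughly like this. The tuples, the 0.6 threshold and the `sentiment_shares` helper are invented for illustration and are not taken from the project.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# (aspect, sentiment, confidence) tuples; the values are made up for illustration.
predictions: List[Tuple[str, str, float]] = [
    ("ui", "positive", 0.92),
    ("ui", "negative", 0.81),
    ("ui", "positive", 0.40),  # below the threshold, ignored
    ("performance", "negative", 0.88),
    ("performance", "negative", 0.75),
]


def sentiment_shares(
    rows: List[Tuple[str, str, float]], min_confidence: float = 0.6
) -> Dict[str, Dict[str, float]]:
    """Return the percentage of each sentiment label per aspect."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for aspect, sentiment, confidence in rows:
        if confidence >= min_confidence:
            counts[aspect][sentiment] += 1
    shares: Dict[str, Dict[str, float]] = {}
    for aspect, counter in counts.items():
        total = sum(counter.values())
        shares[aspect] = {
            label: 100.0 * counter[label] / total
            for label in ("positive", "neutral", "negative")
        }
    return shares


shares = sentiment_shares(predictions)
```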

The LLM Engine for deep analysis

Large Language Models (LLMs) are deep learning models built on transformer networks and trained on large amounts of text. Their architecture makes them suitable for advanced tasks such as text analysis, because they can interpret natural language in context.

The LLM engine uses the open-source Mistral 7B model, accessed through Ollama, to analyze the reviews using the SERVQUAL framework. Prompt engineering was needed to find the prompt version best suited to the task. After a number of trials on a combination of synthetic and real reviews, the model was integrated into the pipeline.

The LLM looks for the following:

- Reliability: Product quality and functionality issues

- Assurance: Customer trust, security, and support quality

- Tangibles: Interface design and user experience

- Empathy: Customer care and policy satisfaction

- Responsiveness: Speed and communication effectiveness

The following snippet shows the final version of the prompt, embedded in the prompting method.

    def _create_servqual_prompt(self, review_text: str, platform: str, rating: int) -> str:
        """Create platform-aware SERVQUAL analysis prompt with better dimension detection."""
        # More specific dimension definitions
        dimension_definitions = {
            'reliability': 'Product/service quality, functionality, accuracy, performance issues',
            'assurance': 'Customer service, support, security, trust, professional help',
            'tangibles': 'App interface, design, user experience, navigation, features',
            'empathy': 'Personal care, return policies, understanding, accommodation',
            'responsiveness': 'Delivery speed, response times, customer service communication, problem resolution'
        }

        # Platform-specific context with better targeting
        platform_context = {
            'amazon': "This is an Amazon review focusing on product quality and delivery",
            'ebay': "This is an eBay review focusing on seller reliability and marketplace experience",
            'etsy': "This is an Etsy review focusing on handmade quality and seller interaction",
            'temu': "This is a Temu review focusing on value and delivery experience",
            'shein': "This is a Shein review focusing on fashion quality and ordering experience"
        }.get(platform, "This is an e-commerce review")

        # Rating context for better sentiment calibration
        if rating <= 2:
            rating_context = "This is a negative review (1-2 stars) - look for problems and complaints."
            expected_sentiment = "negative (-0.3 to -0.8)"
        elif rating >= 4:
            rating_context = "This is a positive review (4-5 stars) - look for praise and satisfaction."
            expected_sentiment = "positive (0.3 to 0.8)"
        else:
            rating_context = "This is a neutral review (3 stars) - look for mixed feedback."
            expected_sentiment = "neutral (-0.2 to 0.2)"

        # JSON template: one object per dimension with the three fields listed
        # in the prompt; the example values are elided ("...").
        prompt = f"""You are analyzing a customer review for SERVQUAL service quality dimensions.

    {platform_context}
    {rating_context}
    Expected sentiment range: {expected_sentiment}

    Review Text: "{review_text}"

    Analyze this review for these 5 SERVQUAL dimensions:
    1. RELIABILITY: {dimension_definitions['reliability']}
    2. ASSURANCE: {dimension_definitions['assurance']}
    3. TANGIBLES: {dimension_definitions['tangibles']}
    4. EMPATHY: {dimension_definitions['empathy']}
    5. RESPONSIVENESS: {dimension_definitions['responsiveness']}

    For each dimension:
    - relevant: true if mentioned/implied in review, false otherwise
    - sentiment: score from -0.8 (very negative) to +0.8 (very positive)
    - confidence: your confidence in this analysis (0.7 to 1.0)

    Return ONLY this JSON format:
    {{
        "reliability": {{"relevant": ..., "sentiment": ..., "confidence": ...}},
        "assurance": {{"relevant": ..., "sentiment": ..., "confidence": ...}},
        "tangibles": {{"relevant": ..., "sentiment": ..., "confidence": ...}},
        "empathy": {{"relevant": ..., "sentiment": ..., "confidence": ...}},
        "responsiveness": {{"relevant": ..., "sentiment": ..., "confidence": ...}}
    }}"""
        return prompt
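Once built, the prompt has to be sent to the local model and the reply validated. The sketch below shows one possible way to do that through Ollama's HTTP `/api/generate` endpoint. It is not the project's code: `query_ollama` and `parse_servqual_reply` are hypothetical helpers, the `mistral` model tag and default port are assumptions about the local setup, and the reply is assumed to contain one object per dimension with `relevant`, `sentiment` and `confidence` fields.

```python
import json
import urllib.request
from typing import Any, Dict

DIMENSIONS = ("reliability", "assurance", "tangibles", "empathy", "responsiveness")
# Default local Ollama endpoint (assumption); adjust if the runtime listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def query_ollama(prompt: str, model: str = "mistral") -> str:
    """Send a prompt to a local Ollama server and return the raw model text."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False, "format": "json"}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]


def parse_servqual_reply(raw_text: str) -> Dict[str, Dict[str, Any]]:
    """Validate the model's JSON and clamp scores to the ranges in the prompt."""
    data = json.loads(raw_text)
    parsed: Dict[str, Dict[str, Any]] = {}
    for dimension in DIMENSIONS:
        entry = data.get(dimension)
        if not isinstance(entry, dict):
            continue  # missing dimensions are left to a fallback
        sentiment = max(-0.8, min(0.8, float(entry.get("sentiment", 0.0))))
        confidence = max(0.0, min(1.0, float(entry.get("confidence", 0.0))))
        parsed[dimension] = {
            "relevant": bool(entry.get("relevant", False)),
            "sentiment": sentiment,
            "confidence": confidence,
        }
    return parsed


# Parsing can be exercised without a running server:
sample_reply = '{"reliability": {"relevant": true, "sentiment": -1.5, "confidence": 0.9}}'
parsed = parse_servqual_reply(sample_reply)
```

Clamping protects downstream aggregates from out-of-range scores, which smaller models occasionally produce even when the prompt states the range.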

If the LLM fails to identify a dimension, a fallback method uses keywords to identify the SERVQUAL dimensions.

    enhanced_keywords = {
        'reliability': [
            'quality', 'defective', 'broken', 'fake', 'authentic', 'durable', 'poor quality',
            'accurate', 'description', 'as shown', 'misleading', 'photos', 'not as described',
            'performance', 'crash', 'freeze', 'slow', 'responsive', 'buggy', 'glitch',
            'works', 'working', 'doesnt work', "doesn't work", 'malfunction', 'error'
        ],
        'assurance': [
            'customer service', 'support', 'help', 'response', 'secure', 'customer care',
            'safe', 'fraud', 'scam', 'price', 'expensive', 'value', 'trust', 'reliable',
            'professional', 'knowledgeable', 'helpful', 'rude', 'unhelpful', 'service'
        ],
        'tangibles': [
            'interface', 'design', 'layout', 'navigation', 'search', 'website', 'app',
            'filter', 'checkout', 'payment', 'easy', 'difficult', 'user friendly',
            'confusing', 'intuitive', 'menu', 'page', 'loading', 'ui', 'ux'
        ],
        'empathy': [
            'personalized', 'recommendations', 'understanding', 'care', 'personal',
            'attention', 'individual', 'considerate', 'flexible', 'accommodating',
            'policy', 'return', 'refund', 'exchange', 'custom', 'tailored'
        ],
        'responsiveness': [
            'delivery', 'shipping', 'fast', 'slow', 'tracking', 'status', 'quick',
            'problem', 'issue', 'resolution', 'response time', 'wait', 'delay',
            'immediate', 'prompt', 'timely', 'speed', 'efficiency', 'contact'
        ]
    }
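How such a keyword table might be applied is sketched below. The `detect_dimensions` helper and the plain substring matching are assumptions made for illustration, and `KEYWORDS` contains only a short excerpt of the lists above so that the example stays compact.

```python
from typing import Dict, List

# Small excerpt of the keyword lists, enough to run the example.
KEYWORDS: Dict[str, List[str]] = {
    "reliability": ["broken", "crash", "not as described"],
    "assurance": ["customer service", "scam", "trust"],
    "tangibles": ["interface", "checkout", "navigation"],
    "empathy": ["refund", "return", "policy"],
    "responsiveness": ["delivery", "shipping", "delay"],
}


def detect_dimensions(
    review_text: str, keywords: Dict[str, List[str]] = KEYWORDS
) -> Dict[str, bool]:
    """Flag a dimension as relevant when any of its keywords appears in the text."""
    text = review_text.lower()
    return {
        dimension: any(keyword in text for keyword in words)
        for dimension, words in keywords.items()
    }


flags = detect_dimensions("The app keeps crashing at checkout and my refund never arrived.")
```

Substring matching is simple but coarse: a short keyword such as 'app' would also match 'happy', so a stricter implementation might match on word boundaries instead.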

Results

Accuracy Improvements:

- Reliability detection: 71% vs 10.5% baseline (+576% improvement)

- Trust analysis: 57.5% vs 18% baseline (+219% improvement)

- User experience: 58.5% vs 23% baseline (+154% improvement)
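The improvement figures above are relative changes against the baseline, computed as (new - baseline) / baseline. The short check below reproduces them from the reported rates; the function and dictionary names are illustrative.

```python
def relative_improvement(new_rate: float, baseline_rate: float) -> float:
    """Percentage change of new_rate relative to baseline_rate."""
    return (new_rate - baseline_rate) / baseline_rate * 100.0


# (new rate, baseline rate) in percent, as reported in the list above.
results = {
    "reliability": (71.0, 10.5),
    "trust": (57.5, 18.0),
    "user experience": (58.5, 23.0),
}
improvements = {
    name: round(relative_improvement(new, base))
    for name, (new, base) in results.items()
}
# improvements == {"reliability": 576, "trust": 219, "user experience": 154}
```

Note that these are relative gains: reliability detection rose by 60.5 percentage points, which is 576% of the 10.5% baseline.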

Business Intelligence Capabilities:

- Platform benchmarking: Compare performance across 5 major platforms

- Customer journey insights: Rating-specific pain point identification

- Competitive gaps: Identify areas where competitors are struggling

- Marketing opportunities: Highlight differentiators and messaging angles

Operational Benefits:

- Automated analysis: Replaces 8+ hours of manual review analysis

- Scalable processing: Handles thousands of reviews with checkpoint recovery

- Cost-effective: No ongoing API costs with local LLM deployment

- Real-time insights: Fresh competitive intelligence for campaign optimization

Dashboards & Outputs

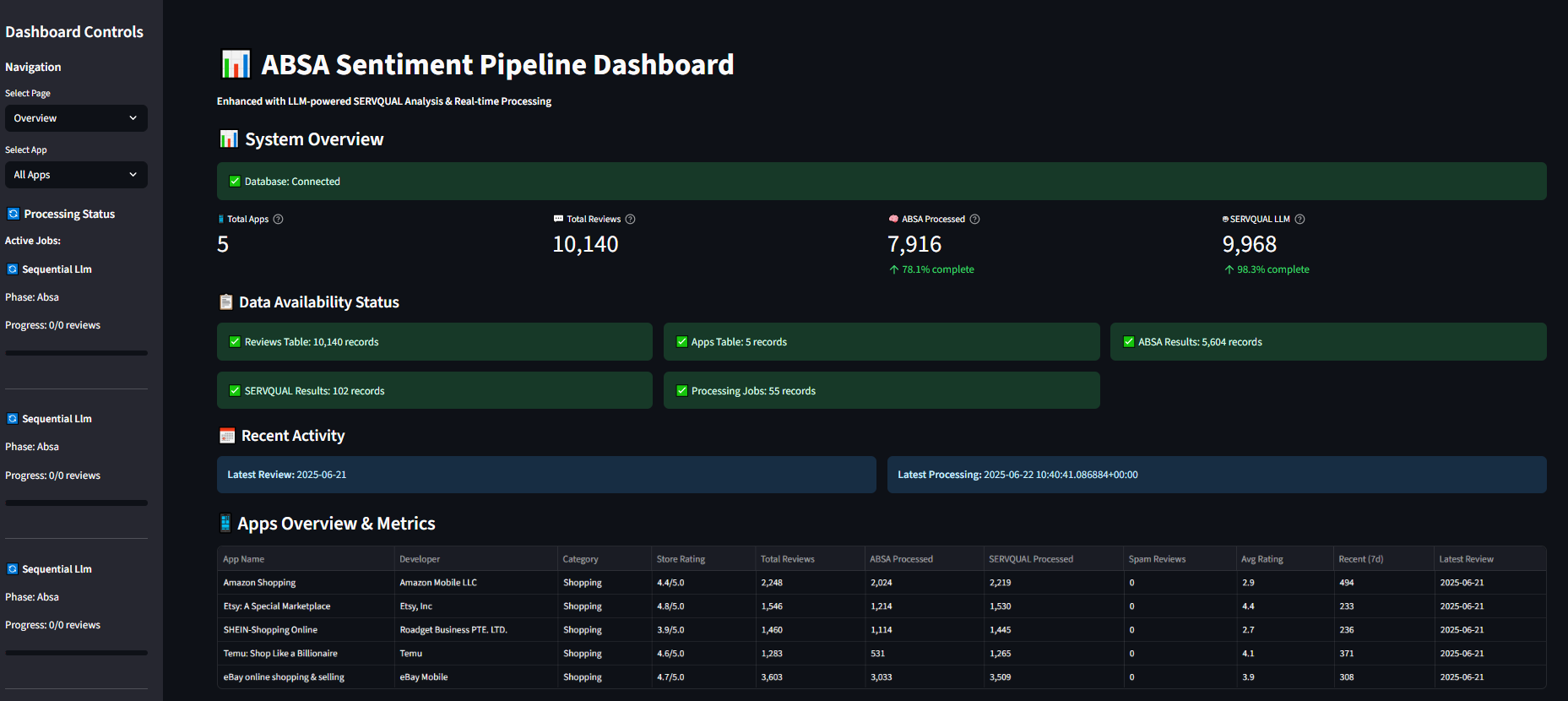

The overview tab presents general system metrics, such as:

- Total apps monitored

- Total reviews stored

- ABSA processed

- SERVQUAL LLM processed

- Apps overview and review processing status for each app

Executive Summary & SERVQUAL

Key outputs of the SERVQUAL analysis:

- SERVQUAL radar charts for competitive positioning

- Platform performance matrix (Amazon vs eBay vs others)

- Trend analysis with carried-forward values for continuity

- Key insights and actionable recommendations
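Carrying values forward means that a day without new reviews reuses the most recent known score, so trend lines do not break. A minimal sketch of that idea follows; the `carry_forward` helper and the sample numbers are hypothetical.

```python
from typing import List, Optional


def carry_forward(values: List[Optional[float]]) -> List[Optional[float]]:
    """Replace each missing value with the most recent known value."""
    filled: List[Optional[float]] = []
    last_seen: Optional[float] = None
    for value in values:
        if value is not None:
            last_seen = value
        filled.append(last_seen)
    return filled


# None marks a day with no analyzed reviews for the dimension.
daily_reliability = [0.42, None, None, 0.35, None]
trend = carry_forward(daily_reliability)
# trend == [0.42, 0.42, 0.42, 0.35, 0.35]
```

Gaps at the very start of a series stay empty, because there is no earlier value to reuse.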

Main Company ABSA Insights

The system includes an option to designate one app as the main company, presenting its aspects as if the primary users worked for that company. In the current scenario, the main company is Amazon.

The main section of the ABSA tab contains a heatmap of the Business Critical Aspects identified within the reviews. To allow Marketing and Operations professionals to go into further depth, a more advanced table was added just below. This table presents a more comprehensive view of customer satisfaction with specific aspects of the business or the app. Additionally, an adjustable table on the same page allows the selection of another app and its specific aspects, so that marketers can compare their results with those of competitors' apps.
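The data behind such a heatmap is essentially an app-by-aspect matrix of average sentiment. The sketch below shows one way it could be assembled; the rows, scores and the `aspect_matrix` helper are invented for illustration and do not come from the project.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (app, aspect, sentiment score) rows; the numbers are invented for the example.
rows: List[Tuple[str, str, float]] = [
    ("amazon", "delivery", 0.6),
    ("amazon", "delivery", 0.2),
    ("amazon", "checkout", -0.4),
    ("ebay", "delivery", -0.1),
]


def aspect_matrix(data: List[Tuple[str, str, float]]) -> Dict[str, Dict[str, float]]:
    """Average the sentiment score for every (app, aspect) pair."""
    totals: Dict[Tuple[str, str], List[float]] = defaultdict(lambda: [0.0, 0.0])
    for app, aspect, score in data:
        totals[(app, aspect)][0] += score  # running sum
        totals[(app, aspect)][1] += 1      # running count
    matrix: Dict[str, Dict[str, float]] = defaultdict(dict)
    for (app, aspect), (total, count) in totals.items():
        matrix[app][aspect] = round(total / count, 2)
    return dict(matrix)


matrix = aspect_matrix(rows)
```

Comparing `matrix["amazon"]` with `matrix["ebay"]` on a shared aspect such as delivery gives the kind of side-by-side view the adjustable competitor table provides.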

Deployment Requirements

Infrastructure

- Standard laptop/desktop (8GB+ RAM recommended)

- PostgreSQL and Redis databases

- Ollama runtime with Mistral 7B model (4GB storage)

- Python 3.8+ environment

Setup Time

- Initial deployment: 2-3 hours

- Model download: 30 minutes (one-time)

- Daily processing: Automated background operation

- Dashboard access: Immediate via web browser

Maintenance

- Weekly performance monitoring

- Monthly model performance review

- Quarterly prompt optimization updates

- Minimal ongoing technical requirements

Further Improvements & Recommendations

Immediate Enhancements

- Expanded platform coverage: Add direct competitor app analysis

- Sentiment trend alerts: Automated notifications for significant changes

- Custom reporting: Automated weekly/monthly executive summaries

- API integration: Connect to existing marketing tools and dashboards

Marketing Applications

- Competitive positioning: Use reliability scores for messaging strategy

- Feature prioritization: Focus development on high-impact trust issues

- Platform strategy: Optimize presence based on platform-specific insights

- Crisis management: Early detection of satisfaction drops and quality issues

Technical Enhancements

- Further LLM integration: Use the existing LLM integration to interpret the data directly and provide advice

- CLV analysis: Use the collected data to conduct Customer Lifetime Value (CLV) analysis, identifying how users behave

ROI Potential:

- Campaign optimization: Data-driven targeting based on competitor weaknesses

- Product development: Customer-validated feature prioritization

- Market expansion: Platform-specific go-to-market strategies

- Customer retention: Proactive addressing of satisfaction drivers

Technologies Used

- Backend: Python, PostgreSQL, AWS Lambda

- Visualization: Tableau, Power BI

- Data Processing: Pandas, NumPy

- Web Scraping: Selenium, BeautifulSoup